In probability theory and statistics, the exponential distribution (or negative exponential distribution) is the probability distribution of the time between events in a Poisson point process, i.e., a process in which events occur continuously and independently at a constant average rate. It is a particular case of the gamma distribution and the continuous analogue of the geometric distribution, and it has the key property of being memoryless. Besides its use in the analysis of Poisson point processes, it appears in various other contexts.

The exponential distribution is not the same as the class of exponential families of distributions. That class is large: it includes the exponential distribution as one of its members, but also many other distributions, such as the normal, binomial, gamma, and Poisson distributions.

From the book: Ian Goodfellow, Yoshua Bengio, Aaron Courville - Deep Learning - The MIT Press (2016)
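The memoryless property mentioned above can be checked numerically. The following is a minimal sketch, assuming the standard survival function P(T > t) = exp(-lam * t); the rate `lam` and the times `s` and `t` are illustrative values, not taken from the post.

```python
import math

def survival(t, lam):
    """P(T > t) for an exponential distribution with rate lam."""
    return math.exp(-lam * t)

def conditional_survival(s, t, lam):
    """P(T > s + t | T > s), from the definition of conditional probability."""
    return survival(s + t, lam) / survival(s, lam)

lam = 1.5        # illustrative rate (events per unit time), an assumption
s, t = 2.0, 0.7  # illustrative waiting times, also assumptions

# Memorylessness: having already waited s does not change the
# probability of waiting at least t longer.
print(conditional_survival(s, t, lam))
print(survival(t, lam))
```

Both printed values should agree up to floating-point rounding, whatever positive values are chosen for `lam`, `s`, and `t`.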

Monday, 27 February 2023

Exponential Probability Distribution

Gaussian Distribution (or Normal Distribution)

From the book: Ian Goodfellow, Yoshua Bengio, Aaron Courville - Deep Learning - The MIT Press (2016)

Normal distributions are a sensible choice for many applications. In the absence of prior knowledge about what form a distribution over the real numbers should take, the normal distribution is a good default choice for two major reasons.

First, many distributions we wish to model are truly close to being normal distributions. The central limit theorem shows that the sum of many independent random variables is approximately normally distributed. This means that in practice, many complicated systems can be modeled successfully as normally distributed noise, even if the system can be decomposed into parts with more structured behavior.

Second, out of all possible probability distributions with the same variance, the normal distribution encodes the maximum amount of uncertainty over the real numbers. We can thus think of the normal distribution as being the one that inserts the least amount of prior knowledge into a model. Fully developing and justifying this idea requires more mathematical tools and is postponed to section 19.4.2.
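The central limit theorem claim in the passage above can be illustrated with a small simulation. This is only a sketch under stated assumptions: the summands are Uniform(0, 1) draws, and the number of terms (30), the sample count (20000), and the seed are arbitrary choices made for this example.

```python
import random
import statistics

def standardized_sum(n_terms, rng):
    """Sum n_terms Uniform(0, 1) draws and rescale to mean 0, variance 1."""
    total = sum(rng.random() for _ in range(n_terms))
    mean = n_terms * 0.5           # a Uniform(0, 1) draw has mean 1/2
    std = (n_terms / 12.0) ** 0.5  # and variance 1/12
    return (total - mean) / std

rng = random.Random(0)  # fixed seed so the run is repeatable
samples = [standardized_sum(30, rng) for _ in range(20000)]

# For a standard normal, about 68.3% of the mass lies within one
# standard deviation of the mean.
within_one_sd = sum(abs(x) <= 1.0 for x in samples) / len(samples)
print(statistics.mean(samples), statistics.pstdev(samples), within_one_sd)
```

The sample mean should be close to 0, the standard deviation close to 1, and the fraction within one standard deviation close to 0.683, even though no individual summand is normally distributed.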

Wednesday, 22 February 2023

Bernoulli Distribution and Binomial Distribution

Bernoulli Distribution

From the book: Deep Learning by Ian Goodfellow

Binomial Probability Distribution

Here "pmf" stands for "probability mass function", the probability distribution of a discrete random variable, and "rv" stands for "random variable". Note: the binomial distribution is a discrete distribution.

Visualization of Binomial Distribution

Difference between binomial distribution and Bernoulli distribution

The Bernoulli distribution represents the success or failure of a single Bernoulli trial. The binomial distribution represents the number of successes in n independent Bernoulli trials, for some given value of n.

Difference Between Normal and Binomial Distribution

The main difference is that the normal distribution is continuous whereas the binomial distribution is discrete. However, when n is large (and the success probability p is not too close to 0 or 1), the binomial distribution is closely approximated by a normal distribution with loc (mean) np and scale (standard deviation) sqrt(np(1 - p)).

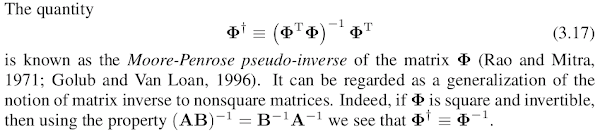
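The relationship between the Bernoulli, binomial, and normal distributions described above can be sketched with the standard library alone. The values `n = 100` and `p = 0.5` are illustrative assumptions, and `loc` and `scale` are set to the usual normal approximation, np and sqrt(np(1 - p)).

```python
import math

def binomial_pmf(k, n, p):
    """P(X = k) for X ~ Binomial(n, p)."""
    return math.comb(n, k) * p**k * (1 - p) ** (n - k)

def normal_pdf(x, loc, scale):
    """Density of a normal distribution with mean loc and standard deviation scale."""
    z = (x - loc) / scale
    return math.exp(-0.5 * z * z) / (scale * math.sqrt(2 * math.pi))

n, p = 100, 0.5  # illustrative values, assumptions for this sketch

# A Bernoulli distribution is the n = 1 special case of the binomial.
bernoulli = [binomial_pmf(k, 1, p) for k in (0, 1)]

# The pmf sums to 1 over k = 0..n.
total = sum(binomial_pmf(k, n, p) for k in range(n + 1))

# Largest pointwise gap between the pmf and the approximating normal density.
loc, scale = n * p, math.sqrt(n * p * (1 - p))
max_gap = max(abs(binomial_pmf(k, n, p) - normal_pdf(k, loc, scale))
              for k in range(n + 1))
print(bernoulli, total, max_gap)
```

The gap shrinks as n grows, which is the sense in which the binomial distribution becomes "quite similar" to a normal one.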

Moore Penrose Pseudoinverse

The Moore-Penrose pseudoinverse is a linear algebra technique used to approximate the inverse of non-invertible matrices. It is defined for any matrix, whether or not the matrix is square; in short, a pseudoinverse exists for all matrices. If a matrix has an inverse, its pseudoinverse equals its inverse.

From the book: Deep Learning by Ian Goodfellow

From the book: Pattern Recognition and Machine Learning by Christopher Bishop
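As a minimal sketch of these properties, the code below computes the pseudoinverse of a small non-square matrix using the closed form (AᵀA)⁻¹Aᵀ. That formula is only valid when the columns of A are linearly independent, which is an assumption of this example; the helper names are hypothetical, and in practice a library routine such as `numpy.linalg.pinv` handles the general case.

```python
def transpose(m):
    """Transpose a matrix stored as a list of rows."""
    return [list(row) for row in zip(*m)]

def matmul(a, b):
    """Multiply two matrices stored as lists of rows."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]

def inverse_2x2(m):
    """Inverse of a 2 x 2 matrix via the adjugate formula."""
    (a, b), (c, d) = m
    det = a * d - b * c
    if det == 0:
        raise ValueError("matrix is singular")
    return [[d / det, -b / det], [-c / det, a / det]]

def pinv_full_column_rank(a):
    """Pseudoinverse of an m x 2 matrix with linearly independent columns."""
    at = transpose(a)
    return matmul(inverse_2x2(matmul(at, a)), at)

A = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 x 2, so it has no ordinary inverse
A_plus = pinv_full_column_rank(A)          # 2 x 3

print(matmul(A_plus, A))             # approximately the 2 x 2 identity
print(matmul(matmul(A, A_plus), A))  # approximately A again
```

For a square invertible 2 x 2 matrix, the same function returns the ordinary inverse, up to floating-point rounding.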

Monday, 20 February 2023

Cartesian and Polar Representation of 2D Vector

Simple Cartesian Representation of Vector

A vector in the Cartesian plane has two components: an x-component and a y-component. The vector is written (x, y).

Simple Polar Representation of Vector

A 2D vector in polar representation has two components: an angle θ and a magnitude r. The vector is written (r, θ), and it corresponds to the Cartesian vector (r cos θ, r sin θ).
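Converting between the two representations takes only a few lines. The sketch below assumes angles measured in radians, counterclockwise from the positive x-axis; the function names and the sample vector (3, 4) are illustrative.

```python
import math

def cartesian_to_polar(x, y):
    """Return (magnitude, angle in radians) for the vector (x, y)."""
    return math.hypot(x, y), math.atan2(y, x)

def polar_to_cartesian(magnitude, angle):
    """Return (x, y) for a vector given by magnitude and angle in radians."""
    return magnitude * math.cos(angle), magnitude * math.sin(angle)

r, theta = cartesian_to_polar(3.0, 4.0)
print(r, math.degrees(theta))
print(polar_to_cartesian(r, theta))
```

`math.atan2` is used rather than `math.atan(y / x)` because it returns the angle in the correct quadrant and does not fail when x is 0.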

Thursday, 16 February 2023

Problem on Derangement Theorem

Ques: There are four balls of different colors and four boxes, each box having the same color as one of the balls. Find the number of ways to place the balls, one in each box, such that no ball goes into the box of its own color.

Ans: This problem comes directly from the 'derangement theorem'.

In combinatorial mathematics, a derangement is a permutation of the elements of a set, such that no element appears in its original position.

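If A, B, C, D are the four balls and a, b, c, d are the boxes of the matching colors, then, listing the balls in the order of boxes a, b, c, d, the nine derangements are: BADC, BCDA, BDAC, CADB, CDAB, CDBA, DABC, DCAB, DCBA.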


The number of derangements of n balls is equal to round(n!/e). For n = 4, this gives round(24/e) = round(8.83) = 9.
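For the four-ball problem, the derangements can be enumerated by brute force and the count compared with round(n!/e). This is a sketch rather than an efficient algorithm: it filters all n! permutations, which is only practical for small n, and the function name is a hypothetical choice.

```python
import math
from itertools import permutations

def derangements(items):
    """Yield every permutation of items in which no element keeps its original position."""
    for perm in permutations(items):
        if all(p != original for p, original in zip(perm, items)):
            yield perm

balls = ["A", "B", "C", "D"]
all_derangements = ["".join(d) for d in derangements(balls)]
print(all_derangements)
print(len(all_derangements), round(math.factorial(len(balls)) / math.e))
```

Each string lists the balls in the order of boxes a, b, c, d, so "BADC" means ball B goes in box a, ball A in box b, and so on.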

Deriving Derangement Theorem


Both n! (factorial, the number of permutations) and !n (subfactorial, the number of derangements) grow very quickly, faster than any exponential function. Look at the table of values below:

| n | Permutations (n!) | Derangements (!n) |
|---|---|---|
| 2 | 2 | 1 |
| 3 | 6 | 2 |
| 4 | 24 | 9 |
| 5 | 120 | 44 |
| 6 | 720 | 265 |
| 7 | 5040 | 1854 |

We will work with the natural logarithm (base e) of these functions. Look at the table of values below:

| n | log(n!) | log(!n) |
|---|---|---|
| 2 | 0.693 | 0.000 |
| 3 | 1.792 | 0.693 |
| 4 | 3.178 | 2.197 |
| 5 | 4.787 | 3.784 |
| 6 | 6.579 | 5.580 |
| 7 | 8.525 | 7.525 |

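As n grows, the difference between the two columns approaches 1, which suggests the following relationship:

log(!n) ≈ log(n!) - 1

=> log(!n) ≈ log(n!) - log(e)

=> log(!n) ≈ log(n! / e)

=> !n ≈ n! / e

The approximation error is always less than 1/2 for n ≥ 1, so the exact relationship between !n and n! is: !n = round(n! / e)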
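The relationship can be checked for more values of n than the tables show. The sketch below computes !n with the standard recurrence !n = (n - 1)(!(n - 1) + !(n - 2)), which is a different route from the one used in the post, and compares it with round(n!/e); the function name and the range of n are illustrative choices.

```python
import math

def subfactorial(n):
    """Number of derangements !n, via !n = (n - 1) * (!(n - 1) + !(n - 2))."""
    prev2, prev1 = 1, 0  # !0 = 1, !1 = 0
    if n == 0:
        return prev2
    for k in range(2, n + 1):
        prev2, prev1 = prev1, (k - 1) * (prev1 + prev2)
    return prev1

for n in range(2, 11):
    # Difference of the natural logs, which should approach 1.
    gap = math.log(math.factorial(n)) - math.log(subfactorial(n))
    matches = subfactorial(n) == round(math.factorial(n) / math.e)
    print(n, subfactorial(n), round(gap, 5), matches)
```

The underlying reason is the inclusion-exclusion formula !n = n! (1 - 1/1! + 1/2! - ... + (-1)^n / n!), whose bracketed sum is a truncation of the series for 1/e. Note that floating-point division limits this check to moderate n.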


Tags: Mathematical Foundations for Data Science
